pitch / investor decks 🀄︎ 🀅 🀆

*powered by iSPARX™

from screens to spaces

• the magical experience of AR+IQ *powered by iSPARX™


The evolution of human-computer interaction is accelerating. For decades, we've engaged with digital experiences through screens: flat, two-dimensional interfaces that disconnect us from our physical environment.

AR+IQ represents a fundamental shift: the intelligence layer that transforms the real world itself into an interactive, responsive medium. This transition is not speculative; it is already underway, supported by mature technology, market-ready devices, and growing enterprise demand.

Adaptive AI Agents

Definition: Intelligent systems that understand context and user intent in real-world environments.

Description:

AR+IQ’s adaptive AI agents go beyond static responses to become situationally aware, context-sensitive entities. Built using GPT-4o and custom-trained multimodal models, these agents analyse inputs such as user behaviour, environmental cues, and historical interaction data to deliver personalised, real-time engagement. Whether guiding users through a cultural exhibit, assisting with retail decisions, or helping visitors navigate event spaces, these agents operate as responsive, location-aware assistants. They continue to learn and adapt through machine learning, offering increasingly intuitive support with each interaction.

Key Features:

Multimodal understanding (voice, text, gesture, gaze)

Opt-in personalisation engine informed by telemetry data

Integration with Unity SDK for real-time environment interaction

Designed to operate ethically, ensuring user privacy and data sovereignty

Geospatial Anchoring

Definition: Precise location-based digital content that integrates seamlessly with physical spaces.

Description:

Geospatial anchoring within AR+IQ uses a combination of GPS, LiDAR, and spatial computing to attach digital assets to exact physical coordinates. This enables persistent, place-based content that remains stable across devices and repeat visits. From augmented heritage trails to in-store product visualisations, AR+IQ ensures that the virtual is meaningfully tied to the physical. It scales across both indoor and outdoor deployments and supports high-fidelity narrative layering across multiple real-world locations.

Key Features:

ARKit/ARCore integration for environmental understanding

CMS tools for location-specific content management

Persistent world anchoring across sessions and users

Compatibility with Apple Vision Pro, Meta Quest, and Android XR

Co-Creation & Storytelling

Definition: Collaborative tools that enable creators and audiences to shape immersive narratives together.

Description:

AR+IQ empowers shared authorship through tools that allow users to contribute to, remix, or extend immersive stories. Whether through workshops with cultural leaders or participatory installations in public spaces, the platform supports collaborative narrative construction. Stories are not fixed scripts but adaptive structures shaped by collective input. These co-creation tools are essential in educational and cultural contexts, where authenticity and community voice are paramount. The system also supports creative professionals with low-code authoring environments and real-time content testing.

Key Features:

Voice and gesture-based interaction layers

Story templates and modular narrative frameworks

Integration with the CMS for live content updates

Supports community-based and kaupapa Māori-driven design practices

boutique software

• SaaS+SAM software development and management *powered by iSPARX™

SaaS+SAM software boutique

The SaaS+SAM boutique develops small-scale, high-impact software grounded in ethical design and composable architecture. It focuses on tools that solve specific operational problems while remaining scalable, lightweight, and secure. Each product is built with a modular framework that supports data sovereignty, responsible AI use, and flexible integration with existing systems.

Workflow and Compliance Automation

Definition: Software systems that automate verification, onboarding, and audit processes while meeting strict regulatory requirements.

Description:

This vertical provides modular tools that streamline compliance-heavy workflows and reduce manual processing. Each component is built to support AML verification, lending assessments, and structured onboarding, using secure data handling and clear procedural logic. The systems are designed to maintain regulatory accuracy, uphold transparency, and meet New Zealand’s privacy and financial compliance standards. They integrate with existing infrastructure through an API-first approach, ensuring reliable operation and straightforward adoption across different organisational contexts.

Key Features:

Automated AML and identity verification modules

Structured onboarding and lending pipelines

Data-secure audit trails with full traceability

API-based integration for financial and compliance systems

Designed to align with NZ privacy regulations and reporting requirements

Education, Capability and Skills Platforms

Definition: Digital learning systems that deliver structured content, personalised recommendations, and skills-based assessments across devices.

Description:

This vertical develops platforms that support educators, cultural organisations, and enterprise teams through adaptive learning tools and capability frameworks. The systems integrate micro-learning engines, recommendation models, and psychometric insights to create personalised pathways that respond to user progress and context. Each platform is designed for ethical data handling, accessibility, and clarity, ensuring that learners engage with content in a way that is meaningful, transparent, and easy to navigate. The architecture is modular, allowing providers to expand curricula, update resources, and integrate new assessment methods without disruption.

Key Features:

Micro-learning modules for focused skill development

Recommendation systems informed by learner behaviour and goals

Psychometric profiling with adaptive content delivery

Cross-device accessibility and responsive design

Ethical data controls aligned with educational privacy expectations

Creative and Spatial Tools

Definition: Software systems that support digital storytelling, media management, and spatial content deployment across cultural and creative contexts.

Description:

This vertical focuses on tools designed for artists, cultural institutions, and organisations working with immersive and location-based media. The systems include lightweight CMS platforms, structured media catalogues, and companion applications tailored to integrate with AR+IQ installations. Each tool enables creators to organise, publish, and analyse spatial content without needing specialist technical skills. The architecture supports clear asset management, consistent metadata structures, and reliable deployment pipelines, ensuring that immersive work can be maintained, iterated, and shared with confidence.

Key Features:

Lightweight CMS for managing spatial and media assets

Structured catalogues with metadata for preservation and search

Companion tools aligned with AR+IQ workflows

Support for spatial storytelling and location-aware content

Designed for ease of use, reducing technical overhead for creators

Entertainment

Sweet Orange

The fan-facing mobile application that transforms how music lovers discover, experience, and engage with live events.

BETA preview

beta download for iOS & Android

Art & Culture

Matariki.App™

The Matariki.App™ is an event index where you can discover and retrieve details of events in the regional schedules for Matariki and Puanga, Waitangi Day, and throughout the year.

The Matariki.App™ is designed to enhance the event experience for users and to provide an effective platform for delivering immersive media within a real-time 3D Matariki environment.

*powered by iSPARX™

Retail

AR+IQ in Retail

AR+IQ by iSPARX™ transforms retail environments into intelligent, interactive spaces. Built on advanced spatial computing, it anchors digital content precisely in real-world locations such as shop floors, product shelves, or event activations. Customers can view product information, try items virtually, or unlock promotions through their devices. Each interaction is captured ethically through real-time analytics, giving retailers insight into dwell time, user preferences, and sales impact. The AR+IQ SDK integrates seamlessly with existing apps or can operate as a white-label solution, supporting brands that want to merge storytelling, customer engagement, and measurable data within a single AR platform.

Smart Experiences and Engagement

Using AR+IQ, retail experiences move beyond static displays into adaptive, personalised journeys. AI-driven agents act as digital concierges, greeting users, answering questions, and recommending products. Spatially anchored campaigns let brands transform any store into a responsive environment that reacts to movement and intent. From virtual store tours to product visualisation and gamified loyalty experiences, AR+IQ makes shopping intuitive, memorable, and data-rich. Designed for sustainability and scale, it supports phones, tablets, and AR glasses, creating a bridge between digital marketing, physical presence, and customer intelligence.

AR+IQ by iSPARX™

AR+IQ is a modular augmented reality platform that combines spatial computing and artificial intelligence to create immersive, intelligent, and location-aware experiences. It adapts to different sectors through custom verticals designed for Retail, Art & Culture, and Entertainment.

Entertainment

From live music to public installations, AR+IQ brings digital layers to events. It connects audiences and performers through interactive effects, spatial storytelling, and shared AR experiences that extend beyond the stage or screen.

Art & Culture

AR+IQ supports artists, museums, and cultural institutions with tools for immersive storytelling. It anchors 3D content, sound, and narrative in real spaces, allowing audiences to explore culture through location-aware experiences.

Retail

AR+IQ transforms shopping environments into interactive spaces. Digital concierges, product visualisation, and gamified loyalty tools enhance customer engagement and provide real-time insights into behaviour and sales.

The Helen Clark Foundation — Website, Database, and Document Retrieval System

We delivered a full redevelopment of The Helen Clark Foundation’s website, designed to modernise how research content is produced, stored, and accessed. The build includes a secure database architecture for managing publications, policy papers, and archival material, along with a structured document retrieval system that allows users to search, filter, and download resources with accuracy.

The project strengthens the Foundation’s digital presence by improving usability, accessibility, and long-term maintainability. It also provides a scalable backend framework that supports future growth, including new research collections, member services, and reporting tools. The work reflects an evidence-driven approach that supports thought leadership, policy engagement, and transparent public communication.

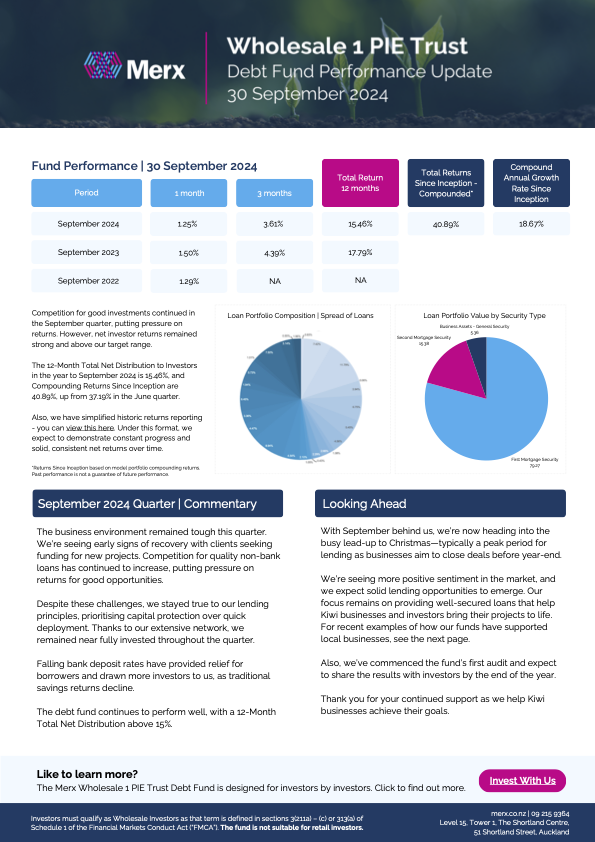

Merx Retail Lending — Automated Lending and Machine Learning Systems

For Merx, we are designing and implementing an automated retail lending workflow that uses AI and machine learning to streamline the full application lifecycle. The system collects and verifies application data, performs real-time risk assessment, and applies adaptive ML models to support consistent and compliant decision-making.

This reduces manual bottlenecks and improves processing speed, while maintaining clear auditability for regulatory purposes. The platform is built to scale, allowing Merx to adjust lending criteria, update models, and incorporate new data sources without disrupting operations. The outcome is a more efficient, reliable, and transparent lending process that benefits both customers and internal teams.

Chris Cuffaro Archive — Digital Catalogue and Photography Database

For the Cuffaro project, we are building a structured digital catalogue that transforms decades of iconic music photography into an accessible, searchable, and durable online archive. The system ingests high-resolution images, captures detailed metadata, and organises each asset within a stable, standards-aligned database.

The platform supports precise navigation, allowing users to explore the work by artist, era, session, location, or story. It maintains full provenance and context, ensuring that every photograph can be traced, referenced, and understood within its historical setting.

This reduces the risk of data loss and improves long-term preservation, while providing curators, researchers, and fans with a reliable and transparent way to interact with the collection. The architecture is built to scale, enabling future expansion, new modes of discovery, and integration with emerging creative and spatial viewing tools.

sweetorange.app — Fan Engagement and Live Event Discovery Platform

For Sweet Orange, we are developing a fan-centred mobile platform that reshapes how audiences discover and interact with live music. The application brings together event listings, artist profiles, and personalised recommendations, supported by a structured data layer that tracks engagement patterns and emerging audience behaviour.

The system provides a clear pathway for users to follow artists, receive tailored alerts, and access curated content that deepens their connection with upcoming shows. Its catalogue architecture supports accurate event metadata, real-time updates, and consistent cross-device performance.

This improves discovery for fans while giving artists and organisers better insight into demand and audience trends. The platform is built to scale, allowing Sweet Orange to expand its dataset, integrate new features, and evolve the fan experience without disrupting the core service.