Integrating Ultrasound Positioning into AR+IQ: Enhancing Spatial Intelligence in Real-Time Immersive Environments

Abstract

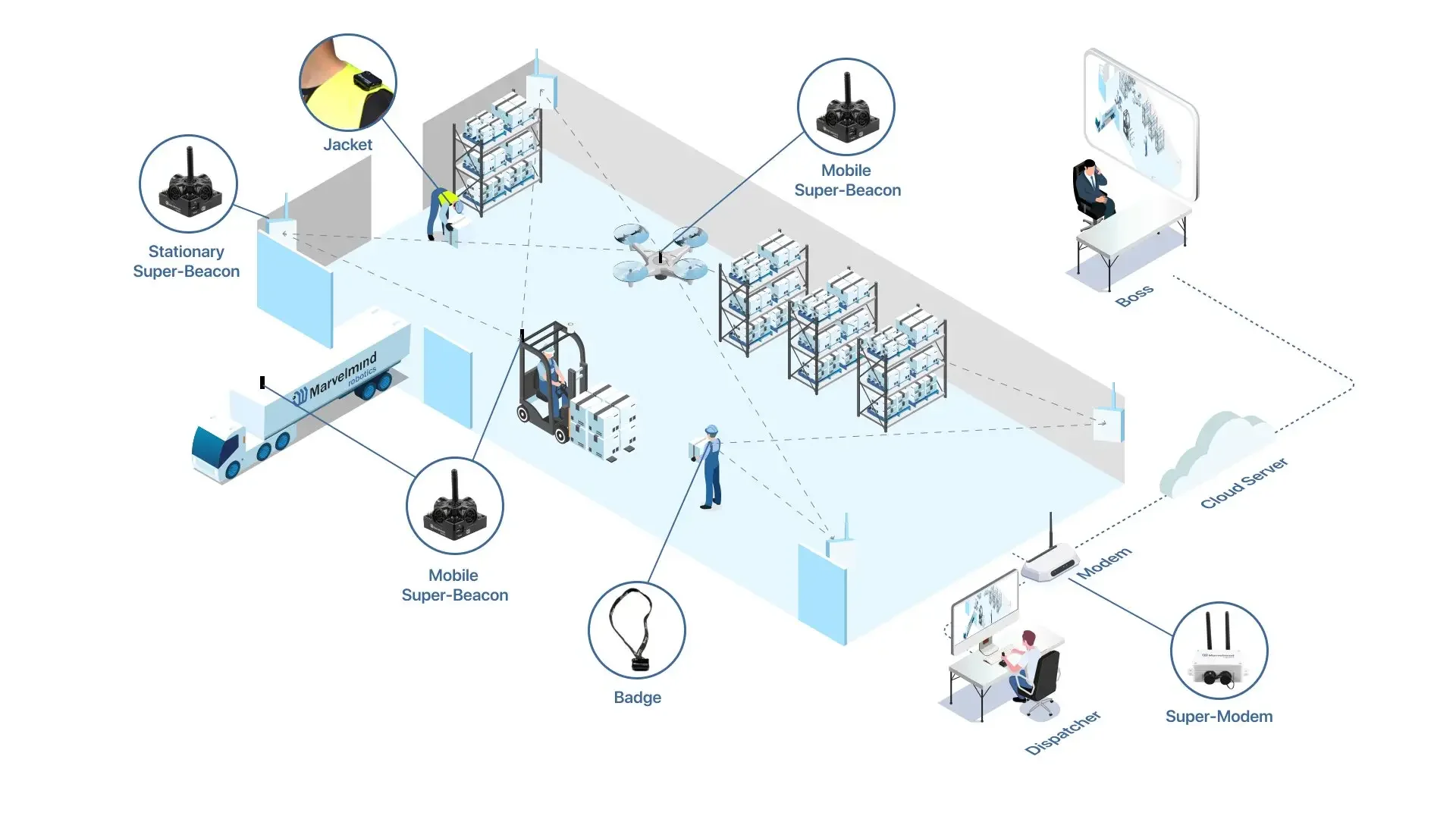

This article outlines the strategic and technical integration of ultrasound-based indoor positioning systems (IPS) into the AR+IQ platform, developed by iSPARX™. AR+IQ is a modular immersive media ecosystem combining Augmented Reality (AR), Artificial Intelligence (AI), and spatial computing. Adding ultrasonic positioning systems, such as those from Marvelmind Robotics, improves AR+IQ’s ability to deliver precise real-time position data in indoor and GPS-denied environments. The article discusses implementation strategies, architectural integration, interoperability with the Unity SDK, benefits for cultural, retail, educational, and experiential installations, and the spatial-computing challenges that ultrasound technologies address.

1. Introduction

AR+IQ by iSPARX™ is a platform that combines advanced 3D media technologies, intelligent agents, and geospatial tools to enable immersive, multi-user, location-aware experiences. Until now, AR+IQ has relied on a combination of GPS, LiDAR, visual anchors, and cloud-based mapping to geolocate and anchor experiences in real-world contexts. In indoor environments or areas with weak GPS reception, however, precise tracking has remained a limitation.

To address this, AR+IQ is now integrating ultrasound-based indoor positioning systems (IPS), beginning with Marvelmind Robotics’ suite of ultrasonic beacon-based tracking tools. This enhancement aligns with AR+IQ’s modular design, supporting seamless interoperability across mobile devices, AR glasses, and installations.

2. Why Ultrasound?

Ultrasound positioning systems provide centimetre-level accuracy in real time and operate independently of GPS and Wi-Fi-based systems. Marvelmind’s approach uses static ultrasonic beacons and mobile tags (hedgehogs): distances between them are derived from the travel time of ultrasonic pulses, and each tag’s position is computed from three or more of these distances (trilateration). These systems are well suited to:

GPS-denied environments (e.g. heritage buildings, underground spaces, galleries)

Multi-user installations where real-time location granularity improves interactivity

Indoor AR navigation (e.g. museums, malls, exhibitions)

Dynamic mapping where spatial accuracy supports trigger-based storytelling
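Ultrasonic ranging rests on time of flight: the distance to a beacon is the speed of sound multiplied by the pulse’s travel time. Because the speed of sound in air rises with temperature (roughly 331.3 + 0.606·T m/s, with T in °C, near room temperature), an unmodelled temperature change shows up directly as range error. The following is a generic physics sketch, not Marvelmind’s firmware; the function names are hypothetical.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s); linear model, valid near room temperature."""
    return 331.3 + 0.606 * temp_c


def tof_to_distance(tof_s: float, temp_c: float = 20.0) -> float:
    """Convert a one-way ultrasonic time of flight (s) to a distance (m)."""
    if tof_s < 0:
        raise ValueError("time of flight must be non-negative")
    return speed_of_sound(temp_c) * tof_s


if __name__ == "__main__":
    # The same 10 ms flight interpreted at two air temperatures.
    d20 = tof_to_distance(0.010, 20.0)
    d30 = tof_to_distance(0.010, 30.0)
    print(f"{d20:.3f} m at 20 C, {d30:.3f} m at 30 C")  # 3.434 m at 20 C, 3.495 m at 30 C
```

For a 10 ms flight, a 10 °C difference shifts the computed range by about 6 cm, which is why air temperature matters when targeting centimetre-level accuracy.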

Adding ultrasound-based IPS supports AR+IQ’s goal of delivering adaptive, intelligent, and ethical location-aware storytelling.

3. Technical Architecture

3.1. Modular Framework Compatibility

AR+IQ’s modular structure enables integration of external positioning modules via a middleware interface. The architecture includes:

AR+IQ Core Kernel: Provides base-layer integration with ARKit, ARCore, and Unity.

AI Interaction Layer: Manages agentic logic and natural language processing.

Geospatial Mapping Layer: Receives and fuses GPS, LiDAR, and now ultrasonic coordinates.

CMS + SDK Tools: Allow experience designers to assign behaviours and triggers to zones.

The ultrasound system feeds real-time (X, Y, Z) coordinates into the Geospatial Mapping Layer as a supplemental source, to be fused with camera vision or LiDAR. Depending on the environment and use case, AR+IQ can give ultrasonic location data priority over other sources.
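One simple way to combine several position sources, while letting one source take priority, is sketched below. It assumes every source already reports positions in a shared world frame along with a rough variance estimate; the class, function, and source labels are hypothetical, and the inverse-variance weighted average stands in for the fuller filtering (for example a Kalman filter) that a production fusion layer would more likely use.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PositionSample:
    source: str        # hypothetical source label, e.g. "ultrasound", "lidar", "vision"
    position: Vec3     # metres, in a shared world frame (assumed)
    variance: float    # estimated positional variance in m^2; smaller means more trusted
    timestamp: float   # seconds


def fuse_positions(samples: Sequence[PositionSample], now: float,
                   max_age: float = 0.5,
                   preferred: Optional[str] = None) -> Optional[Vec3]:
    """Return a fused position from fresh samples, or None if nothing is fresh."""
    # Discard stale samples and any with a non-positive variance estimate.
    fresh = [s for s in samples if now - s.timestamp <= max_age and s.variance > 0]
    if not fresh:
        return None
    # Priority mode: use the preferred source directly whenever it is fresh.
    if preferred is not None:
        for s in fresh:
            if s.source == preferred:
                return s.position
    # Otherwise blend by inverse variance, so precise sources dominate.
    weights = [1.0 / s.variance for s in fresh]
    total = sum(weights)
    return tuple(
        sum(w * s.position[axis] for w, s in zip(weights, fresh)) / total
        for axis in range(3)
    )
```

Passing `preferred="ultrasound"` mirrors the priority switch described above: the ultrasonic fix is used whenever it is fresh, and a weighted blend of the remaining fresh sources takes over when it drops out.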

3.2. Hardware and Data Pipeline

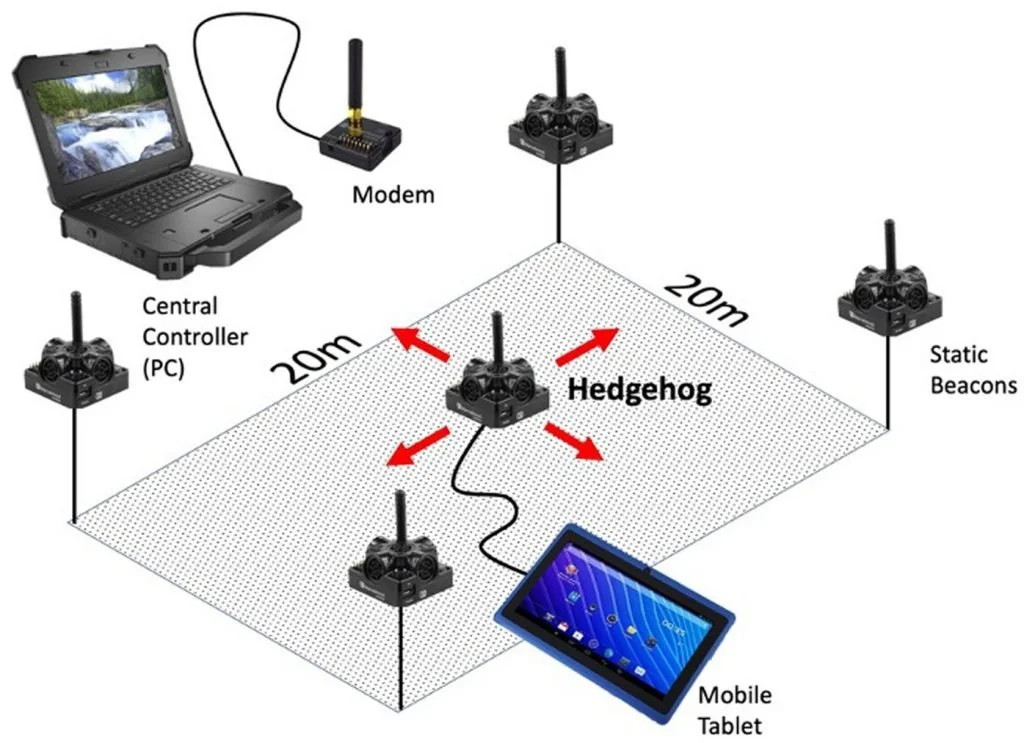

The Marvelmind system includes:

Beacons: Static units mounted on walls or ceilings

Mobile Tags (hedgehogs): Worn by participants or embedded in props

Modem: Central controller which sends data to host device (PC or mobile bridge)

Unity Middleware: Custom C# interface layer that brings in serial data from the modem via USB, Bluetooth, or a Wi-Fi bridge

Data Flow:

Beacons emit ultrasonic pulses

Mobile tag detects pulses from 3 or more beacons

Position computed by trilateration and sent to the modem

Unity/AR+IQ SDK receives coordinates

CMS applies positional triggers (e.g. proximity events, scene switching)
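The positioning step in this flow is, strictly, trilateration: the system knows distances to the beacons rather than angles. A common textbook approach, sketched here, subtracts one range equation from the others to obtain a linear system and solves it by least squares. This is not necessarily the algorithm running in Marvelmind’s modem. The sketch assumes beacon positions in metres as (x, y, z) and a known tag height `tag_z` (for example a lanyard or prop at a fixed height), and it solves only for the horizontal position. That avoids the above/below ambiguity that arises when all beacons share one ceiling plane. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def trilaterate_2d(beacons: Sequence[Vec3], distances: Sequence[float],
                   tag_z: float) -> Vec3:
    """Least-squares horizontal position from beacon ranges, given a known tag height.

    beacons: stationary beacon positions (x, y, z) in metres.
    distances: measured 3D ranges from the tag to each beacon, in metres.
    """
    if len(beacons) != len(distances) or len(beacons) < 3:
        raise ValueError("need one distance per beacon and at least 3 beacons")
    # Project each slant range onto the horizontal plane using the height difference.
    r2 = [max(d * d - (b[2] - tag_z) ** 2, 0.0) for b, d in zip(beacons, distances)]
    x0, y0, _ = beacons[0]
    # Subtracting beacon i's circle equation from beacon 0's gives one linear row:
    #   2(xi - x0) x + 2(yi - y0) y = r0^2 - ri^2 + xi^2 - x0^2 + yi^2 - y0^2
    # Accumulate the 2x2 normal equations (A^T A) p = A^T b.
    a11 = a12 = a22 = c1 = c2 = 0.0
    for (xi, yi, _), ri2 in zip(beacons[1:], r2[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        rhs = r2[0] - ri2 + xi ** 2 - x0 ** 2 + yi ** 2 - y0 ** 2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        c1 += ax * rhs
        c2 += ay * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear in plan view; position is ambiguous")
    # Solve the symmetric 2x2 system by Cramer's rule.
    x = (c1 * a22 - a12 * c2) / det
    y = (a11 * c2 - a12 * c1) / det
    return (x, y, tag_z)
```

With four or more beacons, the least-squares step averages out some range noise rather than fitting three ranges exactly.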

4. Implementation Strategy

4.1. Prototyping in Indoor Venues

Initial implementations took place in controlled cultural environments, including Pātaka Art+Museum and gallery exhibition spaces where GPS and Wi-Fi were unreliable. AR+IQ was configured to:

Place ultrasound beacons in a symmetrical grid, 2.5 to 5 m apart

Assign spatial events (e.g. audio triggers, character movement) to subzones

Provide anchor locks for high-precision spatial media
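A symmetrical layout within the 2.5 to 5 m spacing range can be generated mechanically for a rectangular room. The sketch below, with hypothetical names, places beacons at evenly spaced grid points that include the walls. It chooses the fewest intervals per axis that keep spacing at or below the maximum. It ignores line of sight, obstructions, and ceiling features, which in practice drive final placement.

```python
import math
from typing import List, Tuple


def grid_axis(length_m: float, max_spacing_m: float) -> List[float]:
    """Evenly spaced coordinates from 0 to length_m, using the fewest intervals <= max spacing."""
    intervals = max(1, math.ceil(length_m / max_spacing_m))
    step = length_m / intervals
    return [i * step for i in range(intervals + 1)]


def beacon_grid(width_m: float, depth_m: float, mount_height_m: float,
                min_spacing_m: float = 2.5,
                max_spacing_m: float = 5.0) -> List[Tuple[float, float, float]]:
    """Symmetrical beacon positions (x, y, z) covering a rectangular room."""
    if min(width_m, depth_m) < min_spacing_m:
        raise ValueError("room side shorter than the minimum beacon spacing")
    # When max_spacing_m >= 2 * min_spacing_m (true for the 2.5/5 m defaults),
    # every resulting step lands within [min_spacing_m, max_spacing_m].
    xs = grid_axis(width_m, max_spacing_m)
    ys = grid_axis(depth_m, max_spacing_m)
    return [(x, y, mount_height_m) for y in ys for x in xs]
```

For a 12 m × 8 m gallery, this yields a 4 × 3 grid with 4 m spacing on both axes.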

The CMS interface was extended to include ultrasound-specific calibration options and tools to assign content to fixed subzones.
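Assigning content to fixed subzones amounts to testing each position update against zone boundaries and firing events only when occupancy changes. A minimal sketch with axis-aligned rectangular zones follows; the class names and the enter/exit event tuples are hypothetical, not the AR+IQ CMS schema.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Subzone:
    """Axis-aligned rectangular subzone in floor coordinates (metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class SubzoneTracker:
    """Turns a stream of positions into enter/exit events for content triggers."""

    def __init__(self, zones: Iterable[Subzone]):
        self.zones = list(zones)
        self.inside: Set[str] = set()

    def update(self, x: float, y: float) -> List[Tuple[str, str]]:
        current = {z.name for z in self.zones if z.contains(x, y)}
        # Only transitions produce events; staying inside a zone is silent.
        events = [("enter", name) for name in sorted(current - self.inside)]
        events += [("exit", name) for name in sorted(self.inside - current)]
        self.inside = current
        return events
```

Emitting events on transitions rather than on every update keeps an audio trigger from restarting each time a new fix arrives. A production version would likely add a small hysteresis margin so that jitter at a boundary does not toggle a zone repeatedly.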

4.2. Unity Integration

The AR+IQ Unity SDK was extended with a MarvelmindPositionListener class to:

Parse incoming serial data (via USB or Wi-Fi bridge)

Convert Marvelmind coordinates (in millimetres) into Unity world units

Apply real-time transform updates to virtual anchors

Monitor position loss or interference and fall back to visual anchors
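The responsibilities listed above can be sketched in a few lines. This Python sketch mirrors the described behaviour but is not the AR+IQ C# `MarvelmindPositionListener`. It assumes that the bridge has already decoded Marvelmind’s data into text lines of the form `x_mm,y_mm,z_mm` (Marvelmind’s actual stream format is defined in its own interface documentation and is not parsed here), that Marvelmind’s Z axis points up, and that one Unity unit equals one metre. The class name, line format, and timeout are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
MM_PER_UNITY_UNIT = 1000.0  # assumes 1 Unity unit = 1 metre


def marvelmind_mm_to_unity(x_mm: float, y_mm: float, z_mm: float) -> Vec3:
    """Map a Z-up position in millimetres to Unity's Y-up frame in metres (assumed axes)."""
    return (x_mm / MM_PER_UNITY_UNIT, z_mm / MM_PER_UNITY_UNIT, y_mm / MM_PER_UNITY_UNIT)


class PositionListener:
    """Hypothetical listener: parses decoded lines, tracks freshness, picks an anchor source."""

    def __init__(self, timeout_s: float = 1.0):
        self.timeout_s = timeout_s
        self.last_position: Optional[Vec3] = None
        self.last_update: Optional[float] = None

    def on_line(self, line: str, now: float) -> bool:
        """Parse 'x_mm,y_mm,z_mm'; return True if a valid position was applied."""
        parts = line.strip().split(",")
        if len(parts) != 3:
            return False
        try:
            x, y, z = (float(p) for p in parts)
        except ValueError:
            return False
        if not all(math.isfinite(v) for v in (x, y, z)):
            return False  # reject NaN/inf rather than moving anchors to nonsense
        self.last_position = marvelmind_mm_to_unity(x, y, z)
        self.last_update = now
        return True

    def active_source(self, now: float) -> str:
        """Use ultrasound while updates are fresh; otherwise fall back to visual anchors."""
        if self.last_update is None or now - self.last_update > self.timeout_s:
            return "visual_anchor"
        return "ultrasound"
```

Swapping Y and Z converts a Z-up frame into Unity’s Y-up convention and also flips handedness, which is what Unity’s left-handed system requires if the source frame is right-handed.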

5. Benefits and Use Cases

Whakairo by Kereama Taepa, Tauranga Art Gallery 2025

5.1. Arts and Cultural Heritage

Interactive installations with high spatial accuracy (e.g. multi-user waka navigation, pou carvings)

Allows for dynamic placement of digital stories around taonga or artefacts

Reliable in heritage buildings where Wi-Fi and GPS are restricted

5.2. Retail and Brand Experience

Indoor product discovery tools that respond to movement and location

AR loyalty experiences triggered by physical proximity (e.g. retail treasure hunts)

Enables wayfinding inside complex venues like malls or airports

5.3. Education and Museums

Gamified learning journeys with geofenced knowledge zones

School or kura projects where tamariki can navigate and learn interactively

Greater accessibility for visually impaired users with location-based audio guides

BEEP retail POC BP / EY Seren, Melbourne 2018

5.4. Events and Live Performance

Dynamic stage or crowd-interactive experiences

Enhances live music apps (e.g. Sweet Orange) by enabling positional fan engagement

Can guide audiences through exhibitions or events using precision spatial cues

6. Ethical and Technical Considerations

6.1. Privacy and Consent

All AR+IQ telemetry tools, including ultrasound tracking, are bound by ethical consent protocols. User location is never stored without permission. Participants are briefed on real-time tracking and given opt-out mechanisms.

6.2. Signal Interference and Failover

Ultrasound can be affected by ambient noise, physical obstructions, or multipath reflection. To ensure robustness:

AR+IQ uses sensor fusion (LiDAR, vision, IMU, ultrasound)

Anchors have fallback layers (e.g. visual QR markers)

Beacons are mounted strategically to reduce echo artefacts
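A complementary safeguard against multipath reflections is to reject fixes that imply physically implausible movement. The sketch below gates each new fix on the speed it implies relative to the last accepted fix. It also re-locks after a run of rejections so that a genuine relocation is not ignored forever. The class name and thresholds are hypothetical; a 3 m/s ceiling assumes walking visitors and would need raising for tracked props or performers.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class JumpFilter:
    """Rejects fixes implying implausible speed; re-locks after repeated rejections."""

    def __init__(self, max_speed_mps: float = 3.0, max_rejections: int = 5):
        self.max_speed_mps = max_speed_mps
        self.max_rejections = max_rejections
        self.last_pos: Optional[Vec3] = None
        self.last_t: Optional[float] = None
        self.rejections = 0

    def accept(self, pos: Vec3, t: float) -> bool:
        if self.last_pos is None or self.last_t is None:
            self._commit(pos, t)
            return True
        dt = t - self.last_t
        if dt <= 0:
            return False  # out-of-order or duplicate timestamp
        speed = math.dist(pos, self.last_pos) / dt
        # Accept plausible motion, or stop rejecting after a sustained run of
        # rejections (the user may genuinely have moved, e.g. after an outage).
        if speed <= self.max_speed_mps or self.rejections >= self.max_rejections:
            self._commit(pos, t)
            return True
        self.rejections += 1
        return False

    def _commit(self, pos: Vec3, t: float) -> None:
        self.last_pos, self.last_t, self.rejections = pos, t, 0
```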

6.3. Maintenance and Calibration

Beacons need periodic recalibration, at intervals that depend on how the venue is used. AR+IQ tools allow remote diagnostics and real-time monitoring of beacon health.
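Health monitoring of this kind can be reduced to two checks. The first is whether each beacon has reported recently. The second is whether measured distances between beacon pairs still match the calibrated map; a mismatch suggests a beacon has been moved or knocked. The sketch assumes that such timestamps and inter-beacon distances are available from the system. The function name, thresholds, and report format are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def beacon_health(calibrated: Dict[str, Vec3],
                  last_seen: Dict[str, float],
                  measured: Dict[Tuple[str, str], float],
                  now: float,
                  stale_after_s: float = 10.0,
                  tolerance_m: float = 0.05) -> Dict[str, List[str]]:
    """Flag silent beacons and beacon pairs whose distance no longer matches calibration."""
    report: Dict[str, List[str]] = {"stale": [], "drifted_pairs": []}
    for beacon_id in sorted(calibrated):
        seen = last_seen.get(beacon_id)
        if seen is None or now - seen > stale_after_s:
            report["stale"].append(beacon_id)
    for (a, b), dist in sorted(measured.items()):
        # Compare the live inter-beacon range with the distance implied by the calibrated map.
        expected = math.dist(calibrated[a], calibrated[b])
        if abs(dist - expected) > tolerance_m:
            report["drifted_pairs"].append(f"{a}-{b}")
    return report
```

A pair mismatch implicates both beacons; cross-checking several pairs that share a beacon narrows down which one moved.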

7. Future Development

7.1. AR Glasses and Wearables

We are exploring embedding Marvelmind hedgehogs into AR+IQ-compatible wearables such as AR glasses or artist lanyards. This allows frictionless multi-user tracking in shared spaces without requiring handheld devices.

7.2. Machine Learning for Spatial Prediction

Future iterations will integrate ML models that predict user paths and optimise anchor placement in real time. These are intended to reduce drift and improve tracking in environments with few beacons.

7.3. Cross-Zone Story Continuity

Ultrasound subzones could allow dynamic handover between installations in large-scale exhibitions or festivals, so that a user carries their AR+IQ experience from one venue into another without a manual restart.

8. Conclusion

Integrating ultrasound positioning into AR+IQ represents a major step forward for immersive spatial computing. It addresses core limitations in GPS-denied spaces and brings new levels of interactivity and precision to AR+IQ-powered experiences. Whether guiding visitors through cultural installations, enabling product interaction in retail, or supporting co-creative learning in education, ultrasound technology allows us to build ethical, location-aware digital experiences that are deeply rooted in real-world spatial logic.

This alignment between physical space and virtual behaviour is central to the kaupapa of iSPARX™: to change how we interact with the world.

All features noted here are under active development within the AR+IQ platform as of 2025, with core infrastructure provided by Marvelmind Robotics and integration facilitated by Unity SDK extensions.